However, it’s still cheaper than its competitors.

The new chatbot from DeepSeek introduced itself to me with this engaging description:

Hi, I was created so you can ask anything and get an answer that might even surprise you.

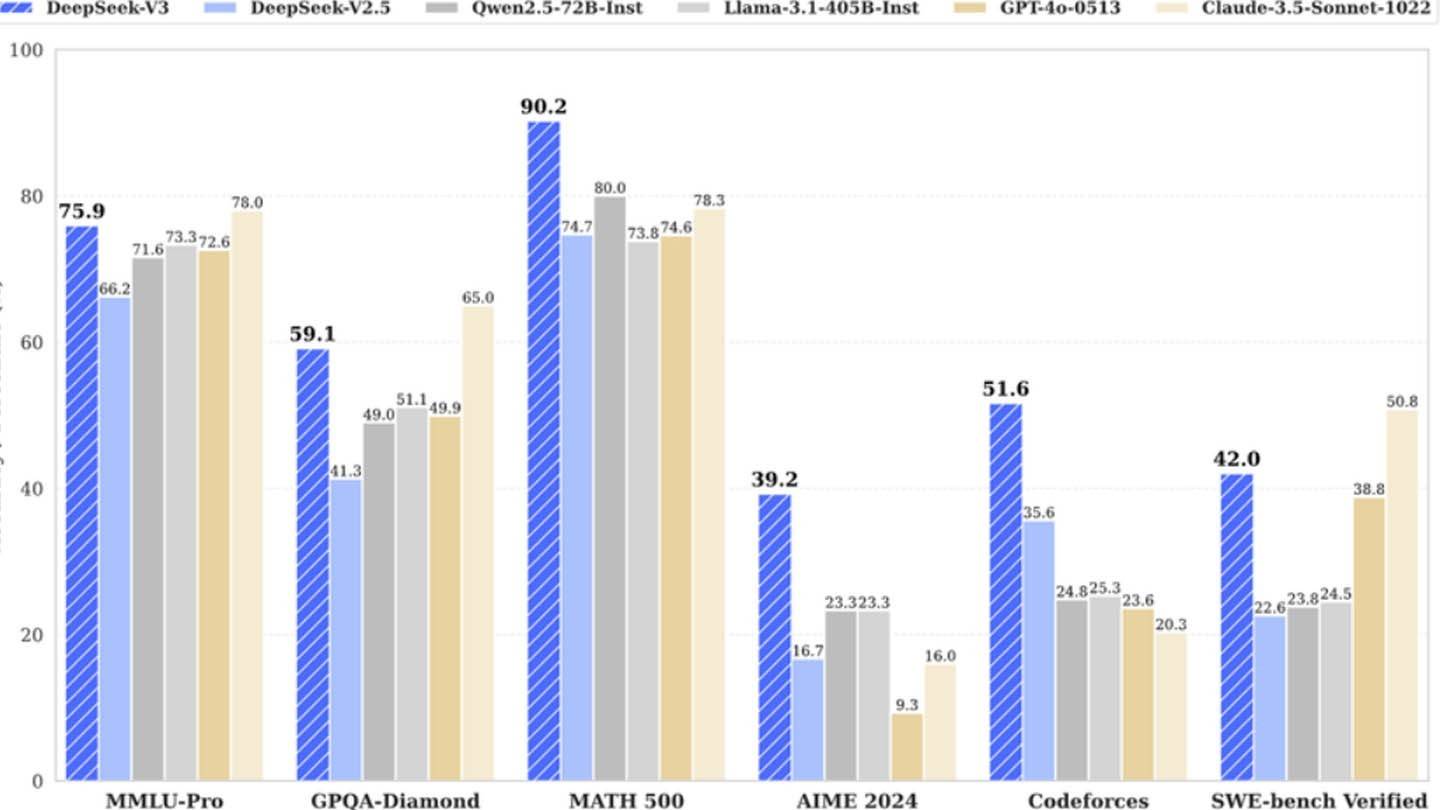

Today, DeepSeek's artificial intelligence has emerged as a formidable competitor in the market, notably contributing to one of NVIDIA's largest stock price drops.

Image: ensigame.com

What distinguishes this model is its innovative architecture and training methods. It uses several cutting-edge techniques:

Multi-token Prediction (MTP): This approach trains the model to predict several upcoming tokens at once rather than only the next one, enhancing both accuracy and efficiency.

Mixture of Experts (MoE): Instead of passing every token through one large network, DeepSeek's model routes each token to a small subset of specialized sub-networks called experts. This architecture speeds up AI training and boosts performance. In DeepSeek V3, 256 expert networks are used, with eight activated for each token.

Multi-head Latent Attention (MLA): This mechanism helps the AI focus on the most significant parts of the input. Several attention heads extract key details from the text in parallel, which reduces the risk of missing important information, while the keys and values they rely on are compressed into compact latent vectors to save memory during inference.
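To make the Mixture of Experts idea concrete, here is a minimal sketch of generic top-k routing in plain Python. It uses the 256-expert and eight-active figures quoted above, but everything else is an assumption made for illustration: the toy hidden size, the random gate weights, the softmax over the selected scores, and the `make_expert` stand-in are hypothetical and are not taken from DeepSeek's implementation.

```python
import math
import random

NUM_EXPERTS = 256  # expert count quoted above for DeepSeek V3
TOP_K = 8          # experts activated per token, as quoted above
HIDDEN = 16        # toy hidden size, chosen only for this illustration


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(token, gate_weights, k=TOP_K):
    """Return (expert_index, weight) pairs for the k best-scoring experts."""
    # One gate score per expert: dot product of its gate row with the token.
    scores = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    top = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)[:k]
    # Normalize only the selected scores so the mixing weights sum to 1.
    weights = softmax([scores[i] for i in top])
    return list(zip(top, weights))


def moe_layer(token, gate_weights, experts, k=TOP_K):
    """Weighted sum of the outputs of only the selected experts."""
    output = [0.0] * len(token)
    for index, weight in route(token, gate_weights, k):
        expert_out = experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output


def make_expert(rng):
    """Hypothetical stand-in for an expert: a fixed per-dimension scaling."""
    scale = [rng.uniform(-1.0, 1.0) for _ in range(HIDDEN)]
    return lambda token: [s * x for s, x in zip(scale, token)]


rng = random.Random(0)
gate_weights = [[rng.gauss(0.0, 1.0) for _ in range(HIDDEN)]
                for _ in range(NUM_EXPERTS)]
experts = [make_expert(rng) for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0.0, 1.0) for _ in range(HIDDEN)]

selected = route(token, gate_weights)
output = moe_layer(token, gate_weights, experts)
print(f"{len(selected)} of {NUM_EXPERTS} experts ran for this token")
```

The point of the sketch is that only the eight selected experts are ever called, so the compute per token stays small even though the layer holds 256 experts' worth of parameters.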

The leading Chinese startup DeepSeek boasts that it created a highly competitive AI model at minimal cost, claiming to have spent only $6 million on training DeepSeek V3 using just 2,048 GPUs.

However, analysts at SemiAnalysis report that DeepSeek operates a substantial computational infrastructure of around 50,000 NVIDIA Hopper GPUs, including 10,000 H800 units, 10,000 more advanced H100s, and additional H20 GPUs. These resources are spread across multiple data centers and used for AI training, research, and financial modeling.

The company's total investment in servers is approximately $1.6 billion, with operational expenses estimated at $944 million.

DeepSeek is a subsidiary of the Chinese hedge fund High-Flyer, which spun off the startup as a separate AI-focused division in 2023. Unlike most startups that rely on cloud providers, DeepSeek owns its data centers, providing full control over AI model optimization and enabling rapid innovation. The company remains self-funded, enhancing its flexibility and decision-making speed.

Moreover, DeepSeek pays some of its researchers over $1.3 million annually, which helps it attract top talent from leading Chinese universities (the company does not hire foreign specialists).

Given these facts, DeepSeek's claim of training its latest model for just $6 million appears unrealistic. This figure only covers the cost of GPU usage during pre-training and does not include research expenses, model refinement, data processing, or overall infrastructure costs.
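As a rough sense of scale, the short calculation below sets the claimed training cost against the infrastructure figures quoted earlier in this article. It is only a back-of-the-envelope sketch: the variable names are illustrative, and the comparison is not like-for-like, since the servers and operating budget support many projects beyond a single training run.

```python
# Figures quoted in this article, in US dollars.
claimed_training_cost = 6_000_000    # DeepSeek's stated V3 training cost
server_investment = 1_600_000_000    # reported investment in servers
operating_expenses = 944_000_000     # reported operational expenses

infrastructure_total = server_investment + operating_expenses
share_of_servers = claimed_training_cost / server_investment
share_of_total = claimed_training_cost / infrastructure_total

print(f"Servers plus operations: ${infrastructure_total / 1e9:.3f} billion")
print(f"Claimed cost vs. servers: {share_of_servers:.1%}")
print(f"Claimed cost vs. servers plus operations: {share_of_total:.1%}")
```

On these numbers, the headline figure amounts to well under one percent of the reported spending, which is why it is better read as the price of one pre-training run than as the cost of building the model.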

Since its inception, DeepSeek has invested over $500 million in AI development. However, its lean structure allows it to implement AI innovations more actively and effectively than larger, more bureaucratic companies.

The example of DeepSeek illustrates that a well-funded, independent AI company can indeed compete with industry giants. Yet, experts note that the company's success is driven by billions in investments, technical breakthroughs, and a strong team, while claims about a "revolutionary budget" for AI model development are somewhat exaggerated.

Nevertheless, DeepSeek's costs remain lower than those of its competitors. For instance, DeepSeek spent $5 million on R1, whereas GPT-4o cost $100 million to train.